
| IDpol | ClaimNb | Exposure | Area | VehPower | VehAge | DrivAge | BonusMalus | VehBrand | VehGas | Density | Region | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

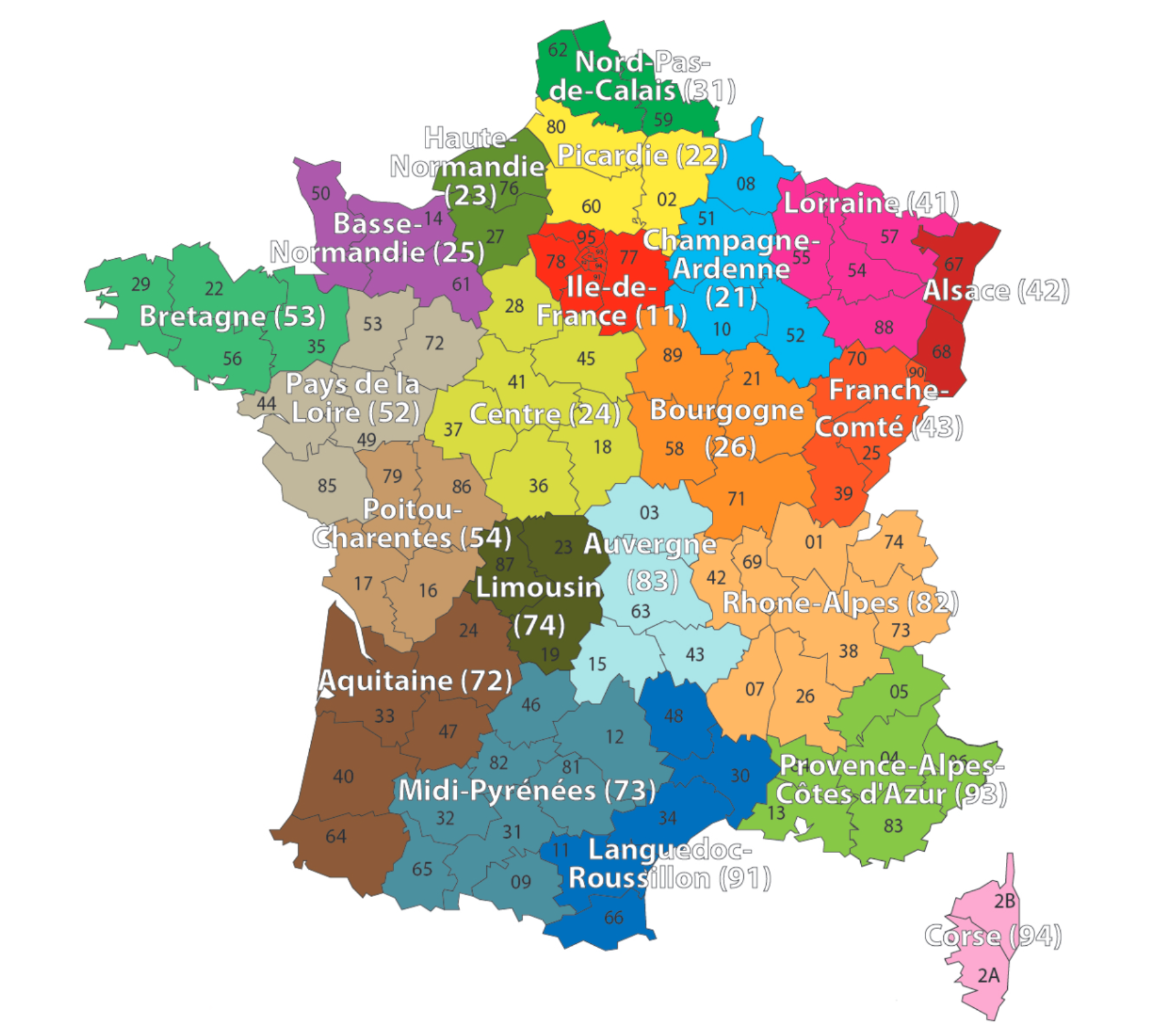

| 0 | 1.0 | 1 | 0.10000 | D | 5 | 0 | 55 | 50 | B12 | 'Regular' | 1217 | R82 |

| 1 | 3.0 | 1 | 0.77000 | D | 5 | 0 | 55 | 50 | B12 | 'Regular' | 1217 | R82 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 678011 | 6114329.0 | 0 | 0.00274 | B | 4 | 0 | 60 | 50 | B12 | 'Regular' | 95 | R26 |

| 678012 | 6114330.0 | 0 | 0.00274 | B | 7 | 6 | 29 | 54 | B12 | 'Diesel' | 65 | R72 |

678013 rows × 12 columns