Artificial Intelligence

ACTL3143 & ACTL5111 Deep Learning for Actuaries

Artificial Intelligence

Lecture Outline

Artificial Intelligence

Deep Learning Successes (Images)

Deep Learning Successes (Text)

Classifying Machine Learning Tasks

Neural Networks

Different goals of AI

Artificial intelligence describes an agent which is capable of:

| Thinking humanly | Thinking rationally |
|---|---|
| Acting humanly | Acting rationally |

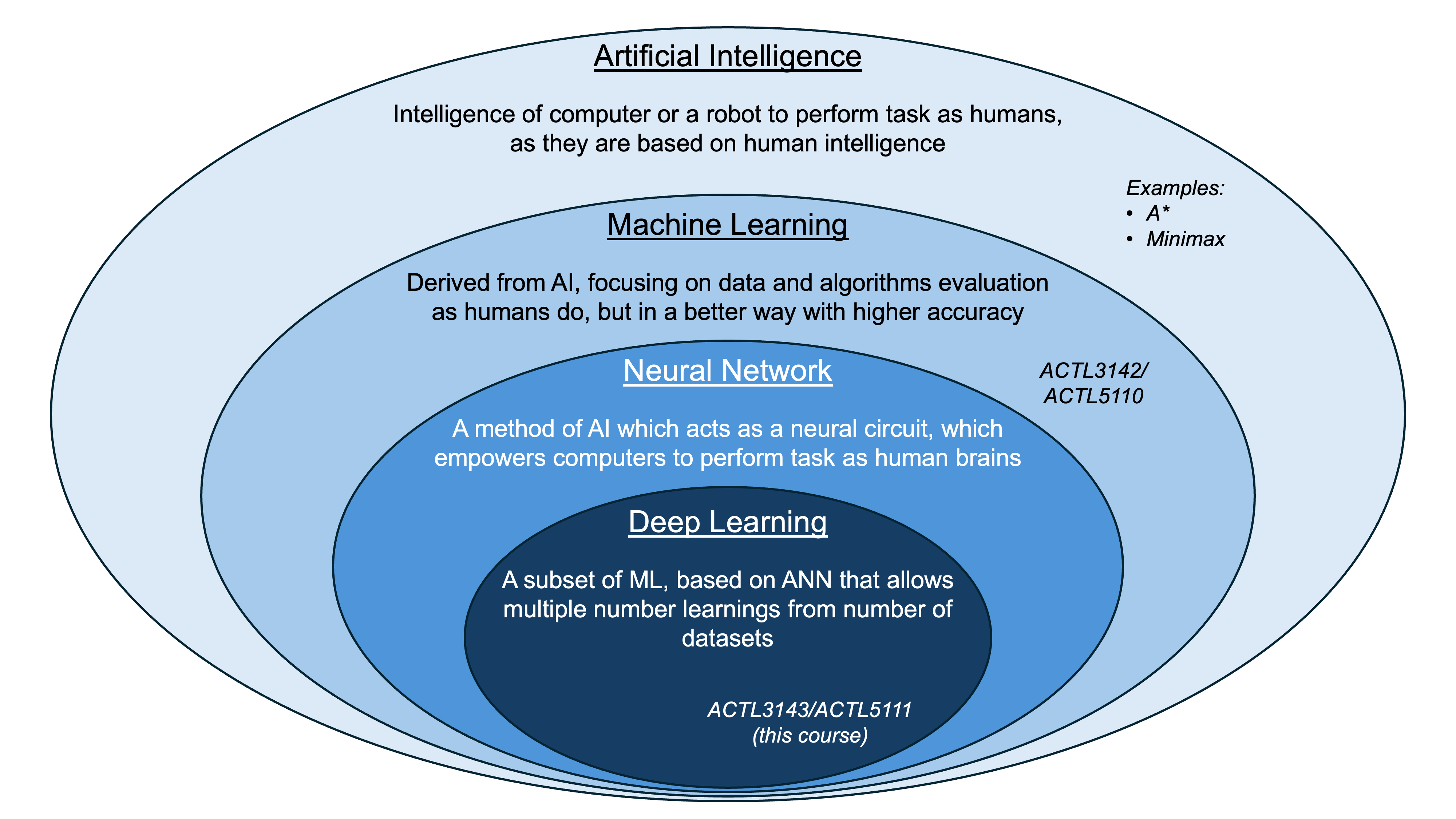

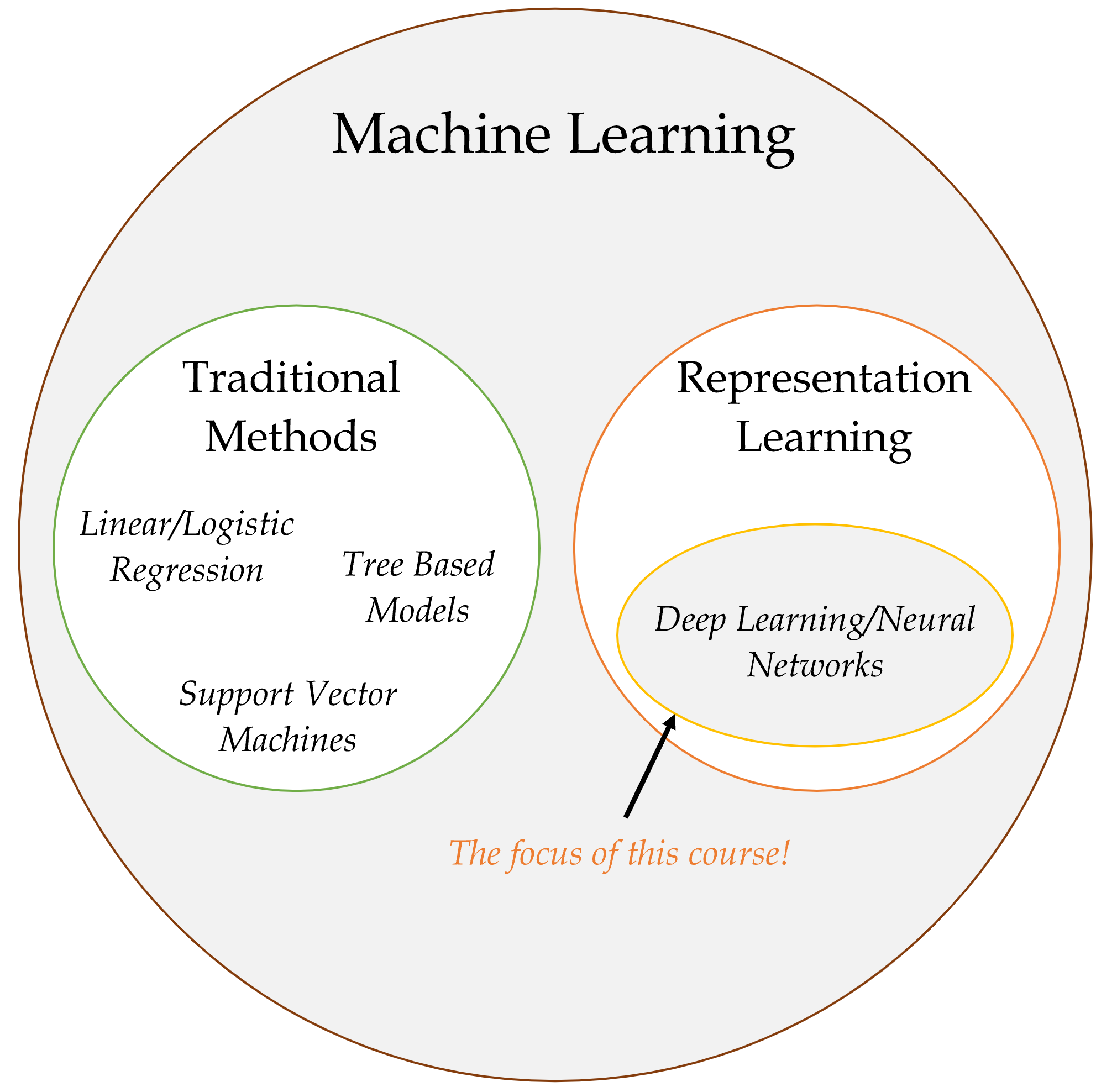

AI eventually became dominated by one approach, called machine learning, which itself is now dominated by deep learning (neural networks).

There are, however, AI algorithms for simple tasks that don't use machine learning.

You can study a 12-week course on AI and never touch on machine learning…

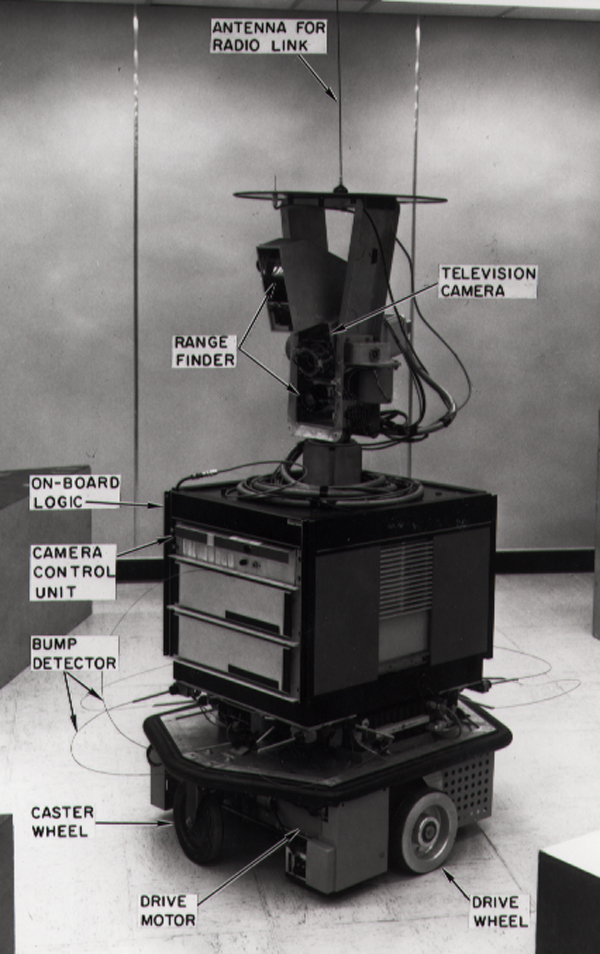

Shakey the Robot (~1966 – 1972)

Source: Wikipedia page for the Shakey Project

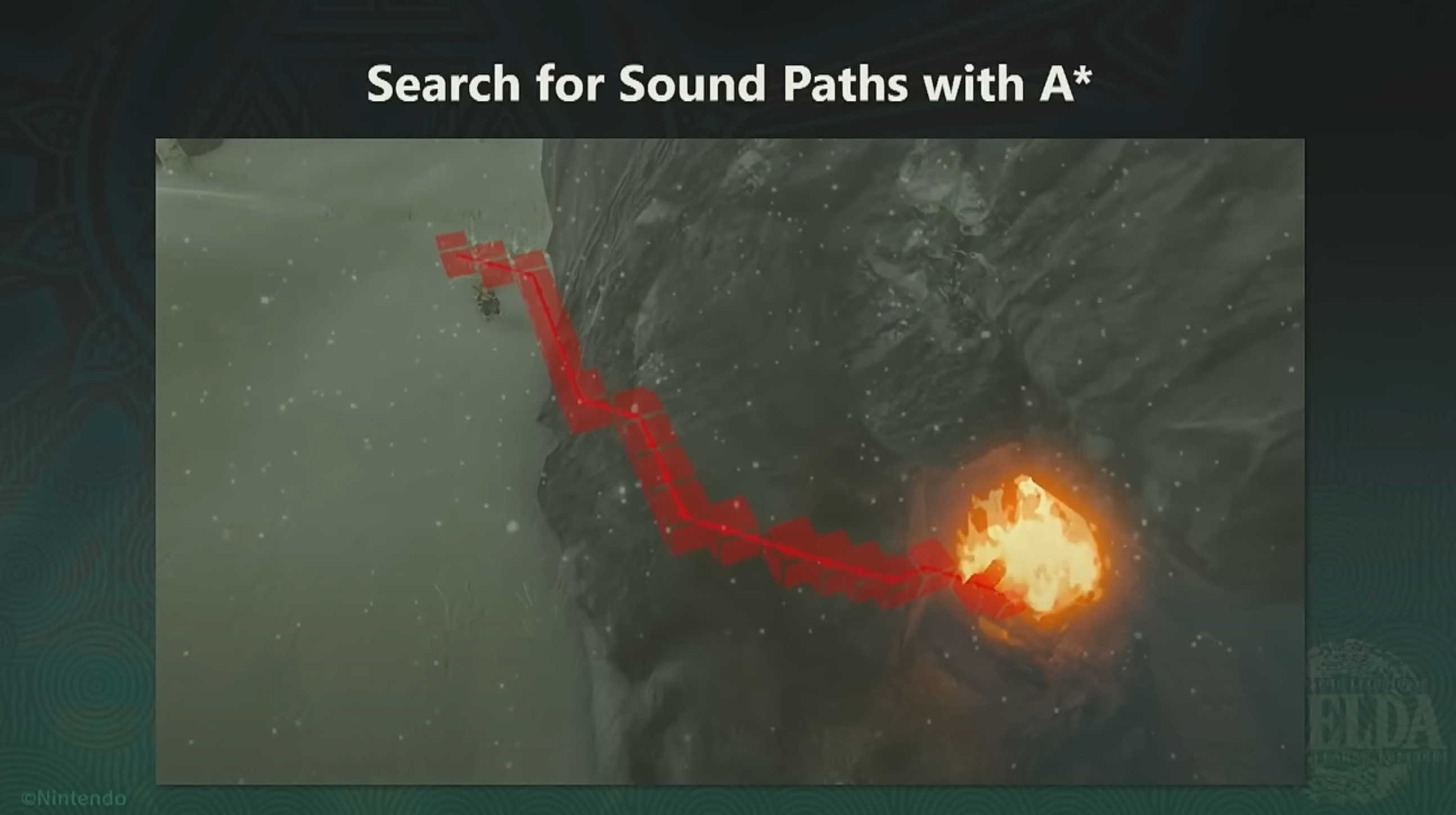

Route-finding I

At its core, a pathfinding method searches a graph by starting at one vertex and exploring adjacent nodes until the destination node is reached, generally with the intent of finding the cheapest route. Although graph searching methods such as a breadth-first search would find a route if given enough time, other methods, which “explore” the graph, would tend to reach the destination sooner. An analogy would be a person walking across a room; rather than examining every possible route in advance, the person would generally walk in the direction of the destination and only deviate from the path to avoid an obstruction, and make deviations as minor as possible. (Source: Wikipedia)

Used in every GPS/Navigation app and…

Source: Wikipedia page for the A* search algorithm.
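The passage above describes A* informally. Here is a minimal sketch, assuming a 4-connected grid with unit step costs and the Manhattan distance as the heuristic. The grid encoding (`'#'` for walls) and the `a_star` name are our own illustration, not taken from the source.

```python
import heapq


def a_star(grid, start, goal):
    """Shortest path on a 4-connected grid, or None if the goal is unreachable.

    grid: list of strings; '#' is a wall, any other character is open.
    start, goal: (row, col) tuples.
    """
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance: never overestimates the true cost on this grid
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    # Priority queue ordered by f = g (cost so far) + h (estimated cost to go)
    frontier = [(h(start), 0, start)]
    came_from = {start: None}
    best_g = {start: 0}

    while frontier:
        _, g, cell = heapq.heappop(frontier)
        if cell == goal:
            # Walk back through the recorded parents to rebuild the route
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if g > best_g[cell]:
            continue  # stale queue entry; a cheaper route was already found
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != "#":
                new_g = g + 1  # every step costs 1
                if new_g < best_g.get((nr, nc), float("inf")):
                    best_g[(nr, nc)] = new_g
                    came_from[(nr, nc)] = cell
                    heapq.heappush(frontier, (new_g + h((nr, nc)), new_g, (nr, nc)))
    return None


grid = [
    "....",
    ".##.",
    "....",
]
print(a_star(grid, (0, 0), (2, 3)))
```

The heuristic is what makes A* head "in the direction of the destination": with `h` always returning 0 it would behave like Dijkstra's algorithm and explore more of the grid.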

Route-finding II

Tunes of the Kingdom: Evolving Physics and Sounds for ‘The Legend of Zelda: Tears of the Kingdom’, GDC 2024

Evaluating a chess game I

Who’s winning this game?

| Count × value | Points |
|---|---|
| 5 × 1 | 5 |
| 0 × 3 | 0 |
| 2 × 3 | 6 |
| 2 × 5 | 10 |
| 0 × 9 | 0 |
| 1 × 0 | 0 |
| White | 21 |

Evaluating a chess game II

Just add up the pieces for each player.

| Count × value | Points |
|---|---|
| 6 × 1 | 6 |
| 1 × 3 | 3 |
| 1 × 3 | 3 |
| 2 × 5 | 10 |
| 0 × 9 | 0 |
| 1 × 0 | 0 |
| Black | 22 |

Overall (White − Black): 21 − 22 = −1, so Black is one point ahead on material.
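The "add up the pieces" evaluation is a one-liner per player. This sketch assumes the conventional piece values (pawn 1, knight 3, bishop 3, rook 5, queen 9, king 0), which match the multipliers in the tables. Which 3-point row is knights and which is bishops is our assumption; it does not change the totals.

```python
# Conventional piece values, matching the multipliers in the tables
PIECE_VALUES = {"pawn": 1, "knight": 3, "bishop": 3, "rook": 5, "queen": 9, "king": 0}


def material(counts):
    """Total points for one player: sum of count x value over their pieces."""
    return sum(PIECE_VALUES[piece] * n for piece, n in counts.items())


def evaluate(white, black):
    """Material balance from White's point of view (negative = Black ahead)."""
    return material(white) - material(black)


# Piece counts read off the two tables; the knight/bishop split of the
# 3-point rows is an assumption (it does not affect the totals).
white = {"pawn": 5, "knight": 0, "bishop": 2, "rook": 2, "queen": 0, "king": 1}
black = {"pawn": 6, "knight": 1, "bishop": 1, "rook": 2, "queen": 0, "king": 1}

print(material(white), material(black), evaluate(white, black))  # 21 22 -1
```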

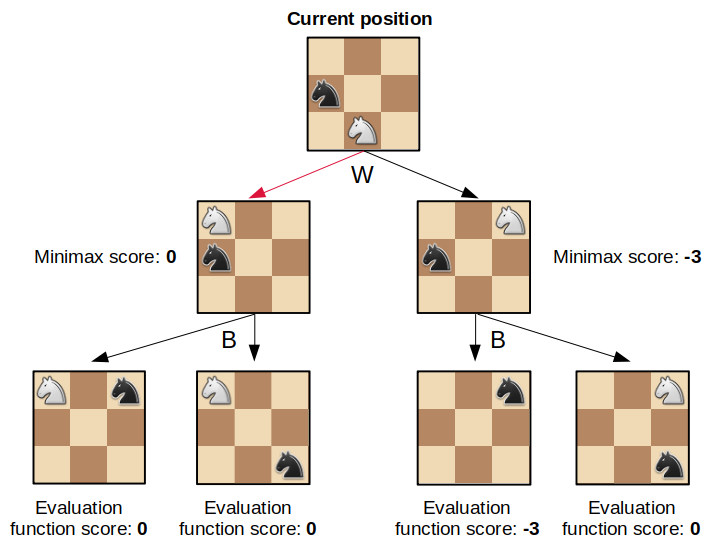

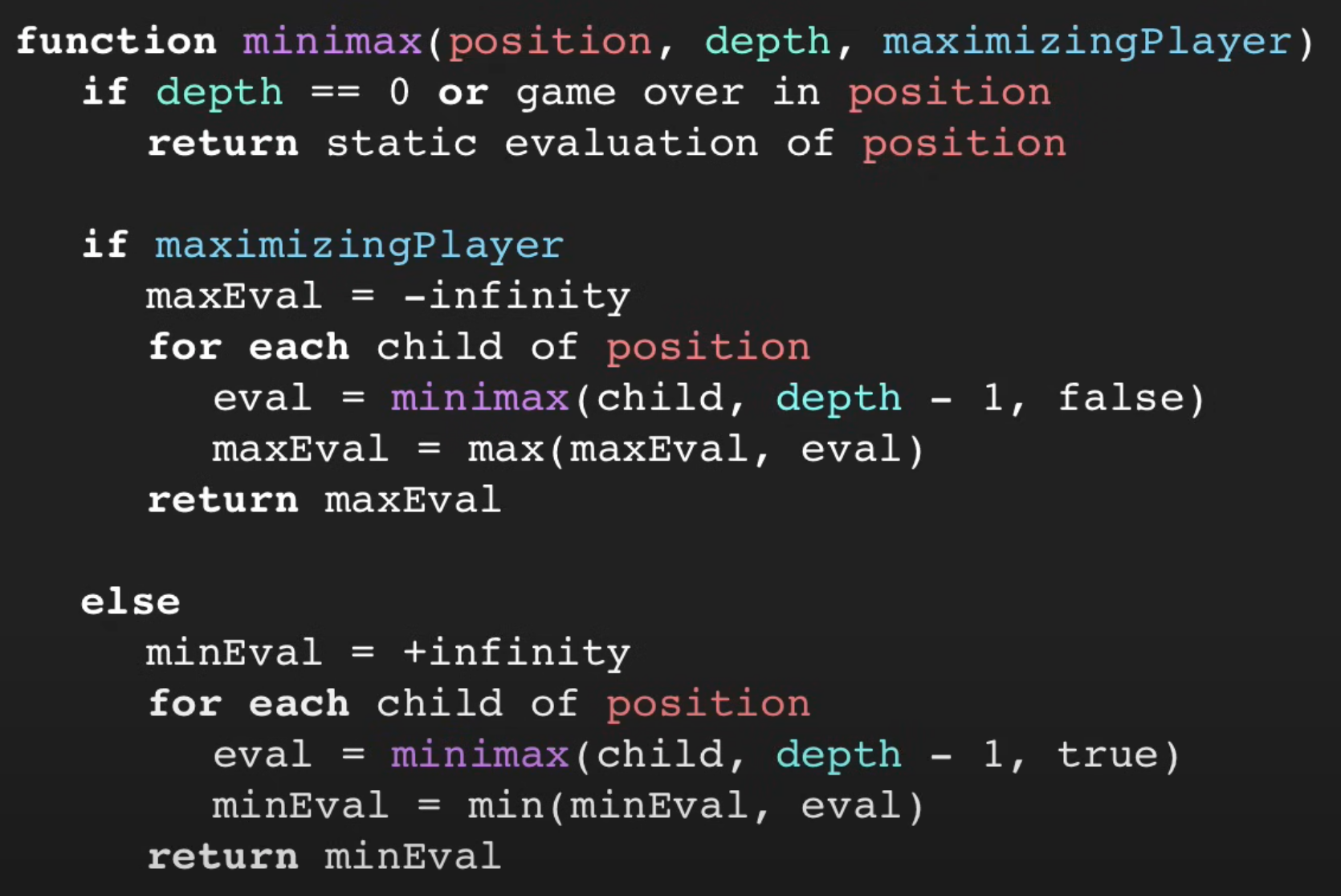

The minimax algorithm

Source: codeRtime, Programming a simple minimax chess engine in R, and Sebastian Lague (2018), Algorithms Explained – minimax and alpha-beta pruning.
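A minimal sketch of minimax with alpha-beta pruning, assuming the game tree is stored as nested Python lists whose leaves are static evaluations (such as the material balance from the previous slides). The tree below is made up for illustration; a real engine would generate children from legal moves instead.

```python
import math


def minimax(node, maximising, alpha=-math.inf, beta=math.inf):
    """Minimax value of a game tree, with alpha-beta pruning.

    A leaf is a number (an evaluation from White's point of view);
    an inner node is a list of child nodes.
    """
    if not isinstance(node, list):
        return node  # leaf: static evaluation
    if maximising:
        best = -math.inf
        for child in node:
            best = max(best, minimax(child, False, alpha, beta))
            alpha = max(alpha, best)
            if alpha >= beta:
                break  # the minimiser above will never allow this line
        return best
    best = math.inf
    for child in node:
        best = min(best, minimax(child, True, alpha, beta))
        beta = min(beta, best)
        if alpha >= beta:
            break  # the maximiser above already has a better option
    return best


# Hypothetical two-ply tree: White picks a move, then Black replies.
tree = [[3, 5], [2, 9], [0, -1]]
print(minimax(tree, maximising=True))  # 3
```

Pruning never changes the returned value; it only skips branches (here the `9` and the `-1`) that cannot affect the final choice.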

Chess

Deep Blue (1997)

Sources: Mark Robert Anderson (2017), Twenty years on from Deep Blue vs Kasparov, The Conversation article, and Computer History Museum.

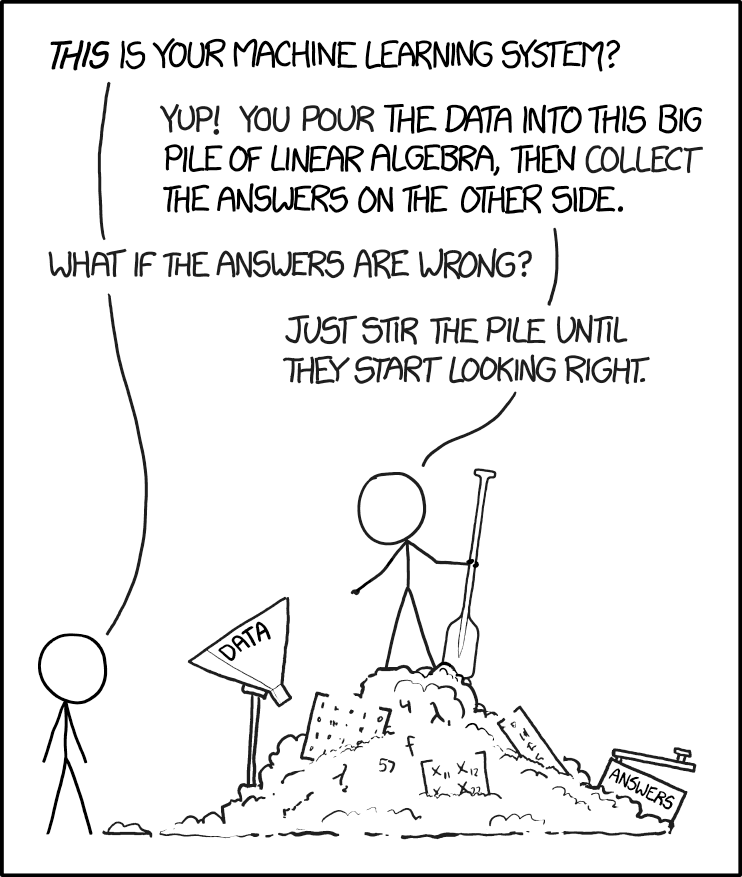

Machine Learning

Tried making a computer smart, too hard!

Make a computer that can learn to be smart.

The Venn diagram of Artificial Intelligence, Machine Learning, Neural Networks and Deep Learning. Adapted from [@shang2025artificial].

Definition

“[Machine Learning is the] field of study that gives computers the ability to learn without being explicitly programmed” Arthur Samuel (1959)

Source: Randall Munroe (2017), xkcd #1838: Machine Learning.

Deep Learning Successes (Images)


Image Classification I

What is this?

Options:

- punching bag

- goblet

- red wine

- hourglass

- balloon

Note

Hover over the options to see AI’s prediction (i.e. the probability of the photo being in that category).

Source: Wikipedia
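Image classifiers of this kind typically output one raw score per category and convert the scores to probabilities with a softmax. That this particular model uses a softmax is an assumption, and the scores below are invented for illustration, not the model's real output.

```python
import numpy as np


def softmax(logits):
    """Turn raw class scores into probabilities that sum to 1."""
    z = np.asarray(logits, dtype=float)
    z = z - z.max()  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum()


options = ["punching bag", "goblet", "red wine", "hourglass", "balloon"]
scores = [0.5, 3.0, 2.0, 0.1, -1.0]  # made-up scores, not the real model's
probs = softmax(scores)
for label, p in sorted(zip(options, probs), key=lambda t: -t[1]):
    print(f"{label:>13}: {p:.3f}")
```

Subtracting the maximum score leaves the probabilities unchanged but stops `np.exp` from overflowing on large scores.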

Image Classification II

What is this?

Options:

- sea urchin

- porcupine

- echidna

- platypus

- quill

Source: Wikipedia

Image Classification III

What is this?

Options:

- dingo

- malinois

- German shepherd

- muzzle

- kelpie

Source: Wikipedia

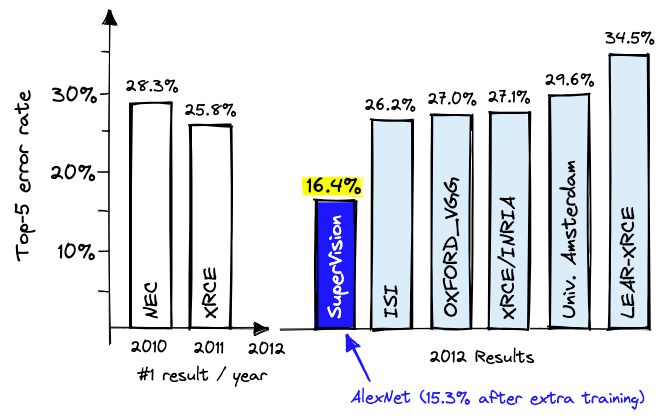

ImageNet Challenge

ImageNet and the ImageNet Large Scale Visual Recognition Challenge (ILSVRC); the challenge's classification task used 1,000 synsets (categories).

AlexNet, a neural network developed by Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton, won the ILSVRC 2012 challenge convincingly, with a top-5 test error of 15.3% against 26.2% for the second-best entry.

Source: James Briggs & Laura Carnevali, AlexNet and ImageNet: The Birth of Deep Learning, Embedding Methods for Image Search, Pinecone Blog

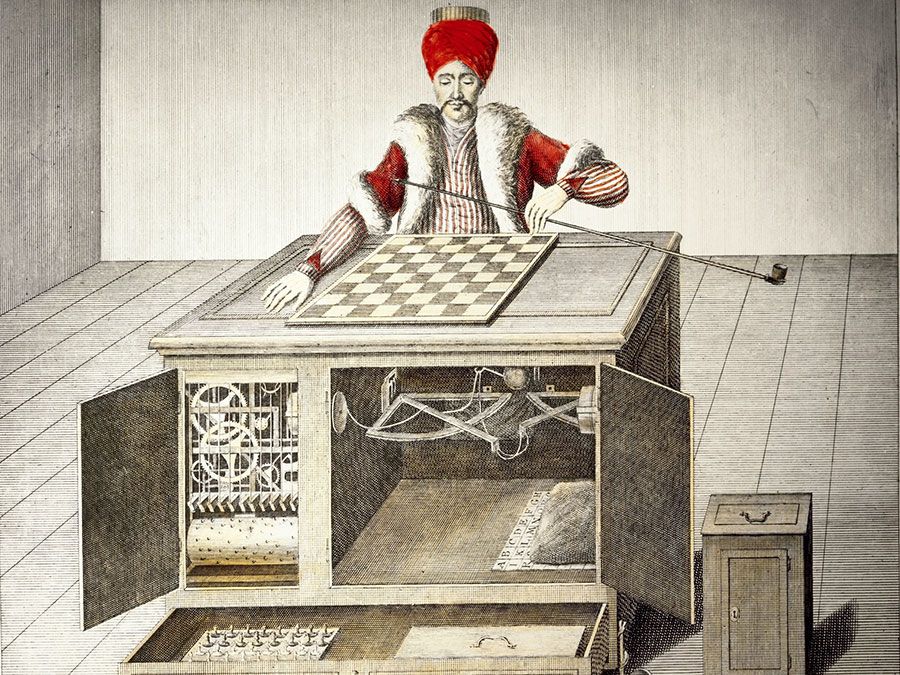

How were the images labelled?

“Two years later, the first version of ImageNet was released with 12 million images structured and labeled in line with the WordNet ontology. If one person had annotated one image/minute and did nothing else in those two years (including sleeping or eating), it would have taken 22 years and 10 months.

To do this in under two years, Li turned to Amazon Mechanical Turk, a crowdsourcing platform where anyone can hire people from around the globe to perform tasks cost-effectively.”

Sources: Editors of Encyclopaedia Britannica, The Mechanical Turk: AI Marvel or Parlor Trick?, and James Briggs & Laura Carnevali, AlexNet and ImageNet: The Birth of Deep Learning, Embedding Methods for Image Search, Pinecone Blog
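The arithmetic in the quote can be checked directly, assuming 365-day years and non-stop labelling at one image per minute:

$$
\begin{aligned}
12{,}000{,}000 \text{ images} \times 1 \text{ min/image} &= 12{,}000{,}000 \text{ min} \\
&= \frac{12{,}000{,}000}{60 \times 24 \times 365} \text{ years} \approx 22.83 \text{ years}, \\
0.83 \text{ years} \times 12 &\approx 10 \text{ months}.
\end{aligned}
$$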

Needed a graphics card

A graphics processing unit (GPU)

Section 4.2, Training on multiple GPUs: “A single GTX 580 GPU has only 3GB of memory, which limits the maximum size of the networks that can be trained on it. It turns out that 1.2 million training examples are enough to train networks which are too big to fit on one GPU. Therefore we spread the net across two GPUs.”

Source: Krizhevsky, Sutskever and Hinton (2017), ImageNet Classification with Deep Convolutional Neural Networks, Communications of the ACM

Lee Sedol plays AlphaGo (2016)

Deep Blue was a win for AI, AlphaGo a win for ML.

Lee Sedol playing AlphaGo AI

I highly recommend this documentary about the event.

Source: Patrick House (2016), AlphaGo, Lee Sedol, and the Reassuring Future of Humans and Machines, New Yorker article.

Generative Adversarial Networks (2014)

https://thispersondoesnotexist.com/

Diffusion models

Source: Dall-E 2 images, prompts by ACTL3143 students in 2022.

Dall-E 2 (2022) vs Dall-E 3 (2023)

Same prompt: “A beautiful calm photorealistic view of an waterside metropolis that has been neglected for hundreds of years and is overgrown with nature”

Dall-E 3 rewrites it as: “Photo of a once-majestic metropolis by the water, now abandoned for centuries. The city’s skyscrapers and buildings are cloaked in thick green vines…”

Deep Learning Successes (Text)


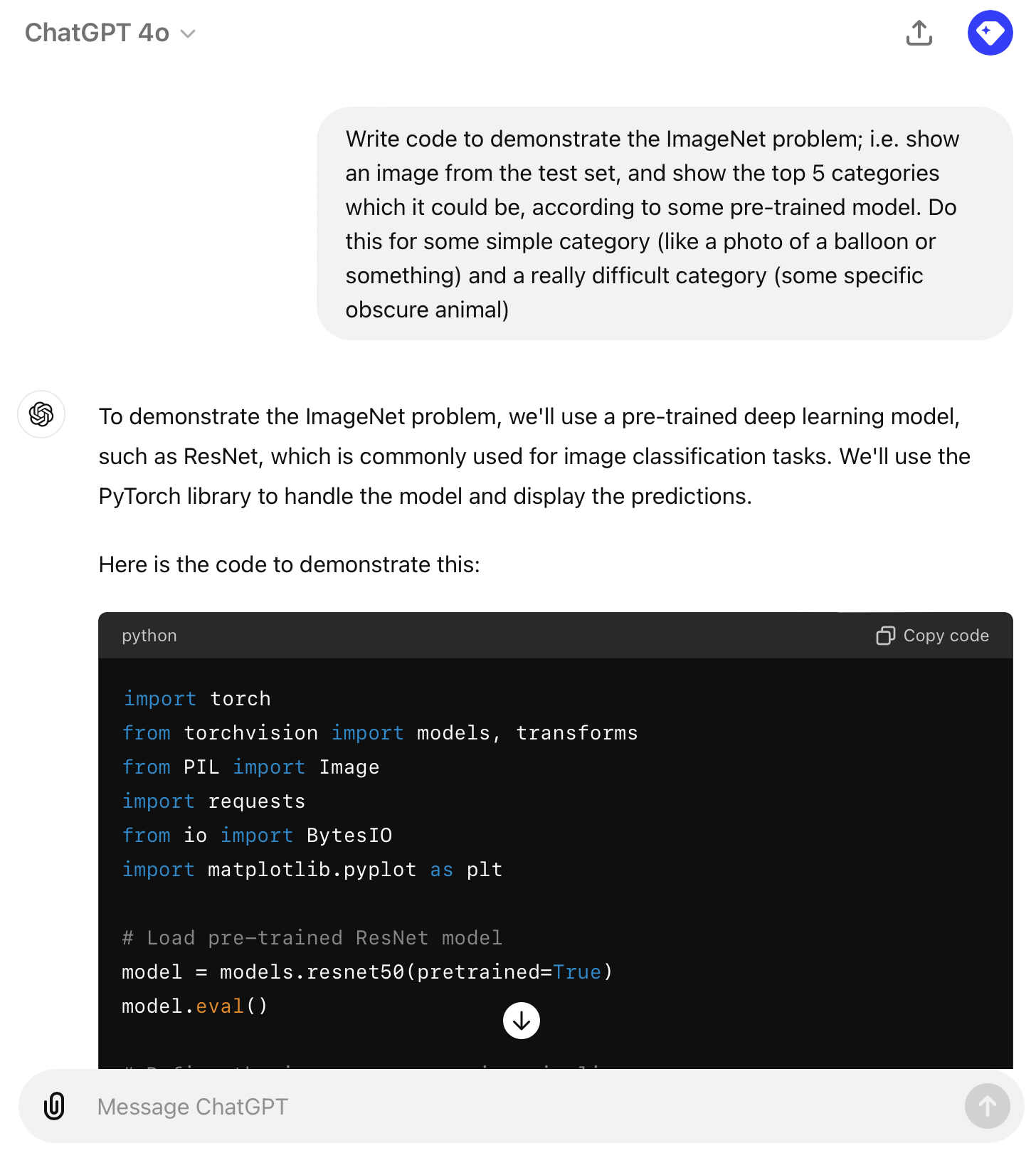

GPT

Homework

Get ChatGPT to:

- generate images

- translate code

- explain code

- run code

- analyse a dataset

- critique code

- critique writing

- voice chat with you

Compare to Copilot.

Source: ChatGPT conversation.

Code generation (GitHub Copilot)

Source: GitHub Blog

Students get Copilot for free

A student post from last year:

I strongly recommend taking a photo holding up your Academic Statement to your phone’s front facing camera when getting verified for the student account on GitHub. No other method of taking/uploading photo proofs worked for me. Furthermore, I had to make sure the name on the statement matched my profile exactly and also had to put in a bio.

Good luck with this potentially annoying process!

Homework

It’s a slow process, so get this going early.

Source: GitHub Education for Students

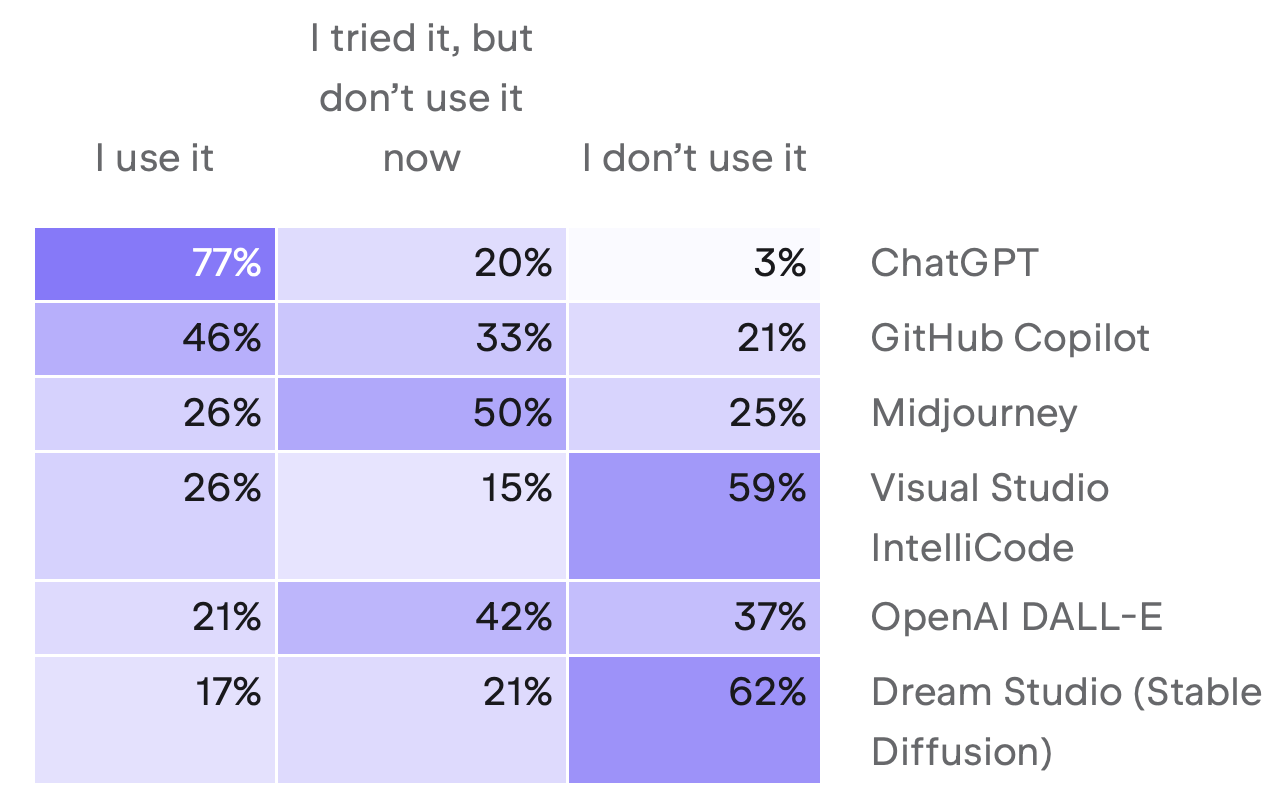

Programmers are increasingly using AI

Question: What is your experience with the following AI tools?

Source: JetBrains, The State of Developer Ecosystem 2023.

Classifying Machine Learning Tasks


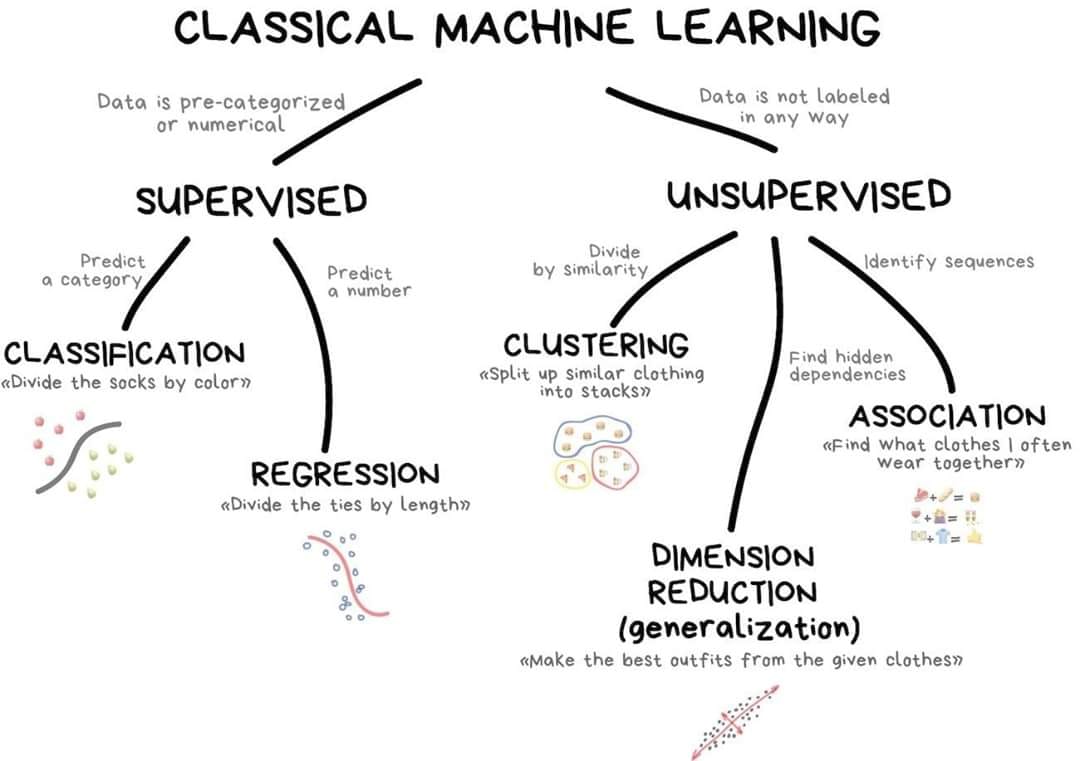

A taxonomy of problems

New ones:

- Reinforcement learning

- Semi-supervised learning

- Active learning

Source: Kaggle, Getting Started.

Supervised learning

The main focus of this course.

Regression

- Given a policy $\hookrightarrow$ predict the rate of claims.

- Given a policy $\hookrightarrow$ predict claim severity.

- Given a reserving triangle $\hookrightarrow$ predict future claims.

Classification

- Given a claim $\hookrightarrow$ classify as fraudulent or not.

- Given a customer $\hookrightarrow$ predict whether the customer will be retained.

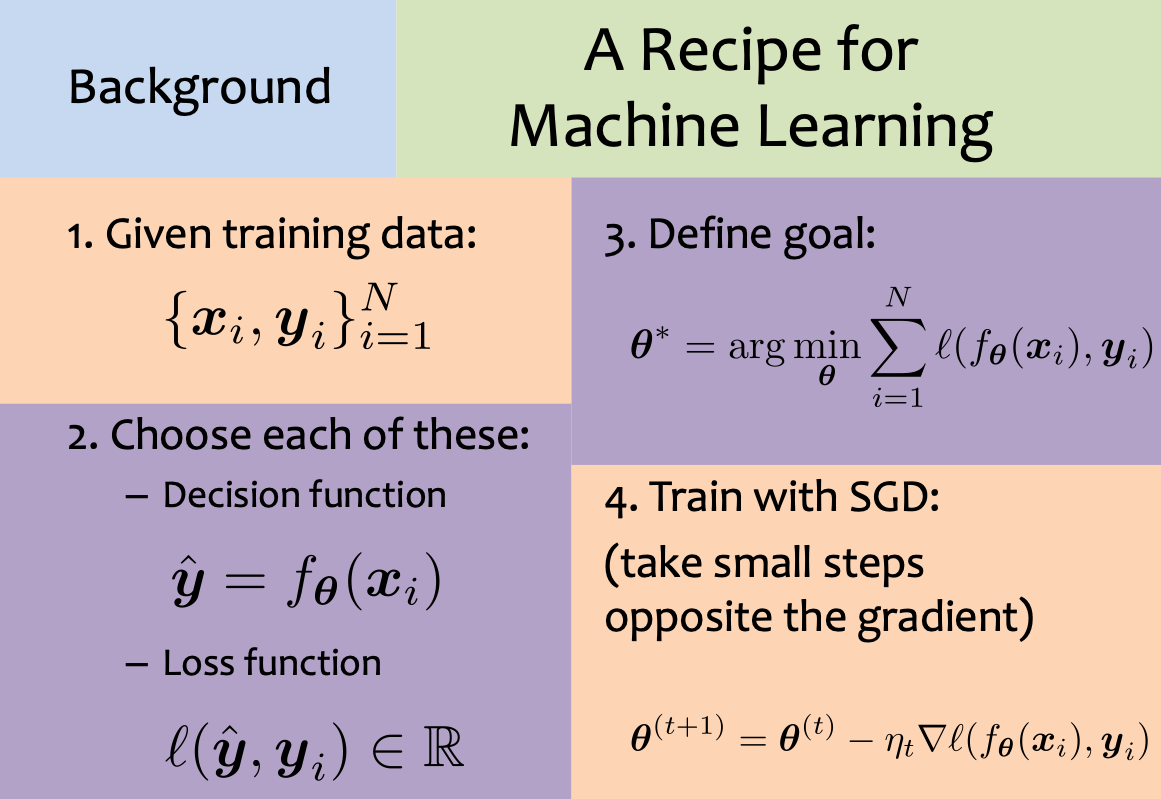

Supervised learning: mathematically

A recipe for supervised learning.

Source: Matthew Gormley (2021), Introduction to Machine Learning Lecture Slides, Slide 67.
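Gormley's recipe itself is not reproduced here. As a hedged sketch, assuming the usual three ingredients (a model, a loss function and an optimiser), here is linear regression fitted by gradient descent. The data are synthetic stand-ins for policy features and a claim-related target, not real insurance data.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic stand-in data (hypothetical): 200 policies, 2 features each
X = rng.normal(size=(200, 2))
true_w, true_b = np.array([0.8, -0.3]), 1.5
y = X @ true_w + true_b + rng.normal(scale=0.1, size=200)

# 1. Model: f(x) = x . w + b, starting from all-zero parameters
w, b = np.zeros(2), 0.0


# 2. Loss: mean squared error between predictions and targets
def mse(w, b):
    return np.mean((X @ w + b - y) ** 2)


# 3. Optimiser: plain (full-batch) gradient descent on the loss
lr = 0.1
for step in range(500):
    residual = X @ w + b - y
    grad_w = 2 * X.T @ residual / len(y)
    grad_b = 2 * residual.mean()
    w -= lr * grad_w
    b -= lr * grad_b

print("learned w:", w.round(2), "b:", round(b, 2), "MSE:", round(mse(w, b), 4))
```

Swapping the model for a neural network, or the loss for cross-entropy (for classification), keeps the same three-part structure.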

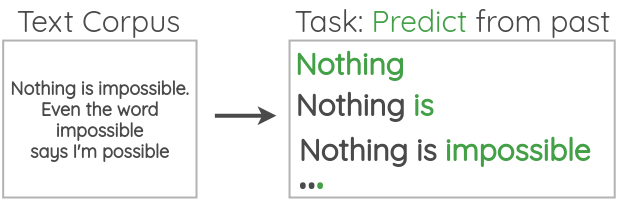

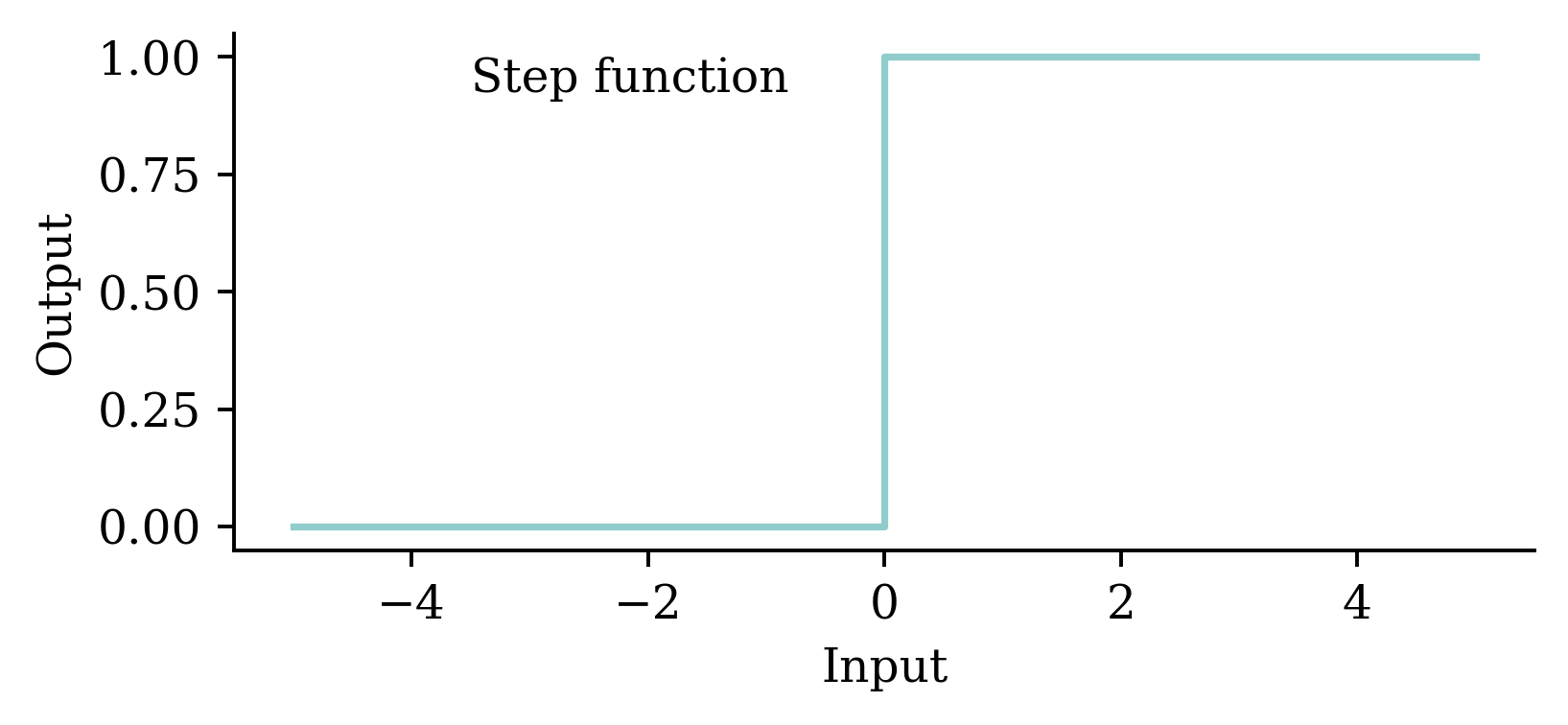

Self-supervised learning

Data which ‘labels itself’. Example: language model.

Source: Amit Chaudhary (2020), Self Supervised Representation Learning in NLP.
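For a language model, the "labels" come for free: the target for each position is simply the next word. A minimal sketch, assuming whitespace tokenisation (real language models use subword tokenisers) and a hypothetical `next_token_pairs` helper:

```python
def next_token_pairs(text, context_size=3):
    """Turn raw text into (context, target) training pairs.

    No human labelling is needed: each target is just the word that
    follows its context. Splitting on whitespace is a simplification.
    """
    tokens = text.split()
    return [
        (tuple(tokens[i - context_size:i]), tokens[i])
        for i in range(context_size, len(tokens))
    ]


pairs = next_token_pairs("the cat sat on the mat", context_size=3)
for context, target in pairs:
    print(context, "->", target)
```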

Example: image inpainting

Other examples: image super-resolution, denoising images.

See Liu et al. (2018), Image Inpainting for Irregular Holes using Partial Convolutions.
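Inpainting is self-supervised in the same way: hide part of an unlabelled image and ask the model to restore the original. This sketch assumes a single square hole, which is simpler than the irregular holes in Liu et al.; the random array stands in for a real greyscale photo, and `make_inpainting_pair` is a hypothetical helper.

```python
import numpy as np


def make_inpainting_pair(image, rng, hole_size=4):
    """Build one self-supervised (input, target) pair from an unlabelled image.

    A random square hole is blanked out of the input; the target is the
    untouched original, so the data supplies its own label.
    """
    h, w = image.shape[:2]
    top = rng.integers(0, h - hole_size + 1)
    left = rng.integers(0, w - hole_size + 1)
    mask = np.ones((h, w), dtype=bool)
    mask[top:top + hole_size, left:left + hole_size] = False  # False = missing
    corrupted = image.copy()
    corrupted[~mask] = 0.0
    return corrupted, image, mask


rng = np.random.default_rng(42)
image = rng.random((16, 16))  # stand-in for a real greyscale photo
x, y, mask = make_inpainting_pair(image, rng)
print("pixels hidden:", (~mask).sum())  # 16 for a 4x4 hole
```

Denoising follows the same pattern, with added noise in place of the hole.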

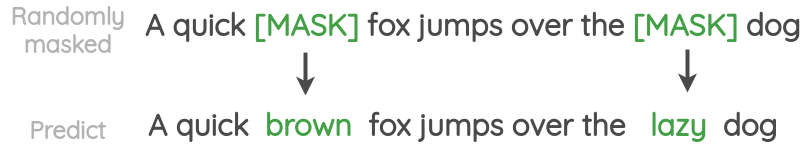

Example: Deoldify images #1

A deoldified version of the famous “Migrant Mother” photograph.

Source: Deoldify package.

Example: Deoldify images #2

A deoldified Golden Gate Bridge under construction.

Source: Deoldify package.

Neural Networks


How do real neurons work?

A neuron ‘firing’

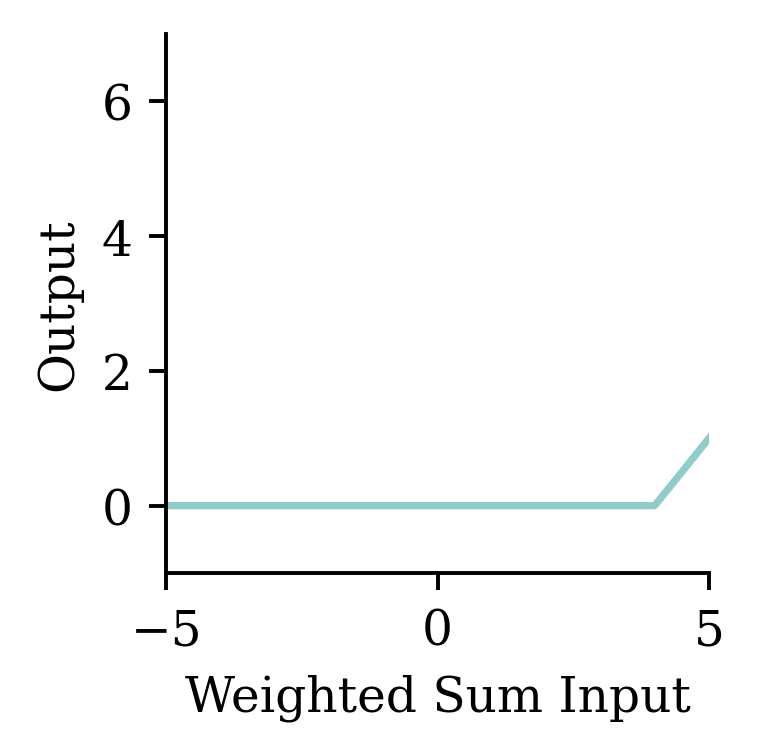

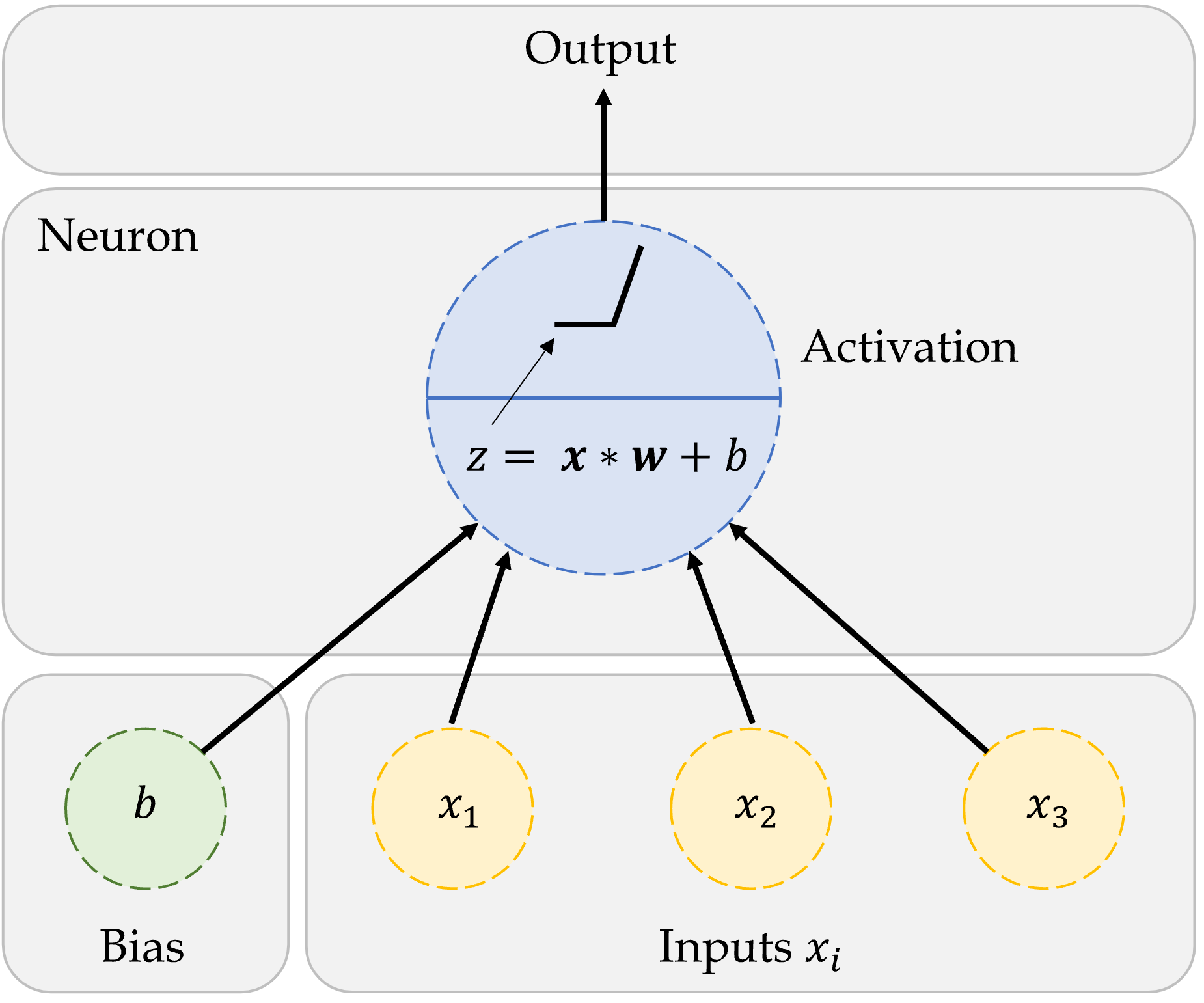

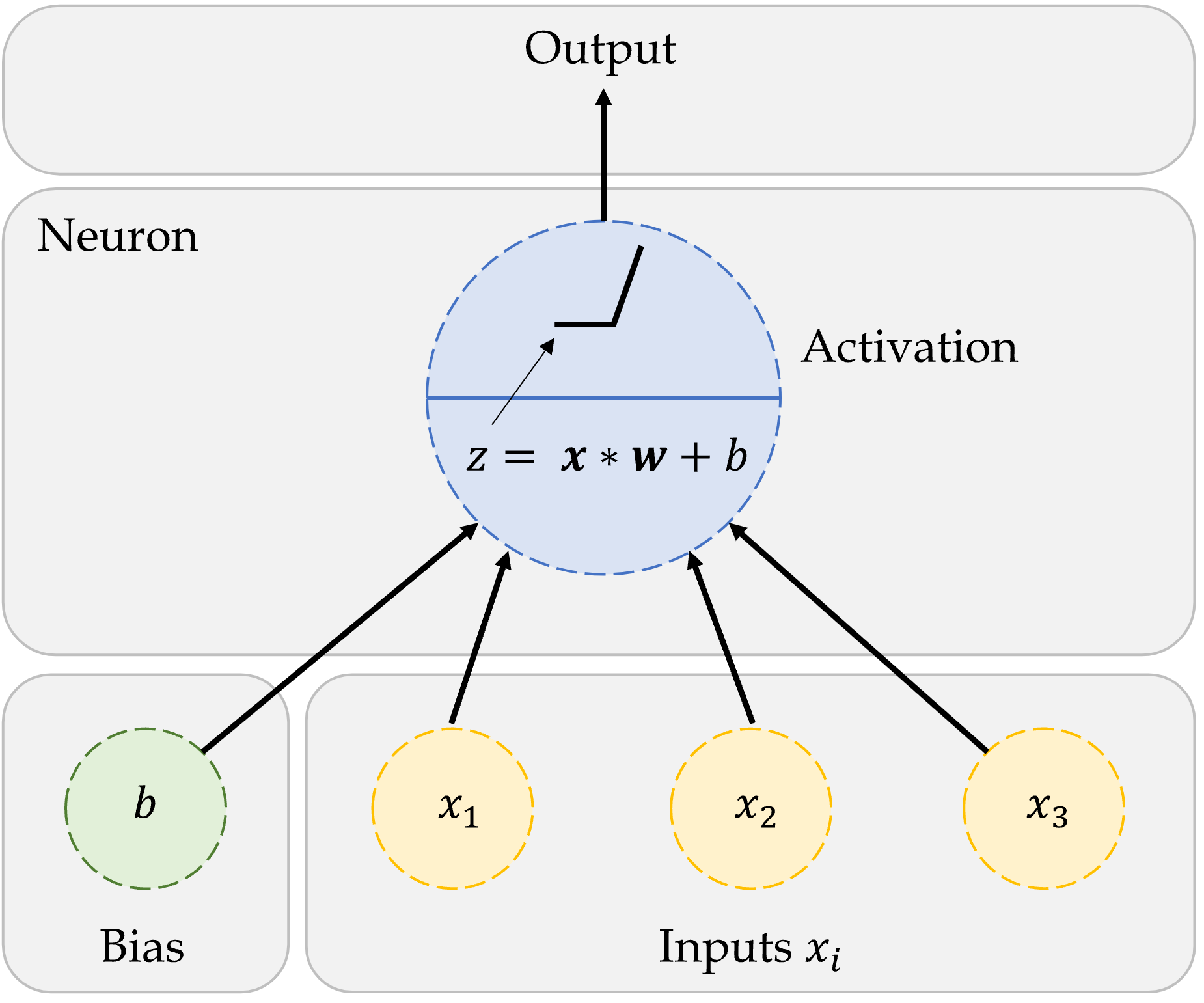

An artificial neuron

A neuron in a neural network with a ReLU activation.

Source: Marcus Lautier (2022).

One neuron

$$
\begin{aligned} z~=~&x_1 \times w_1 + \\ &x_2 \times w_2 + \\ &x_3 \times w_3 . \end{aligned}
$$

$$
a = \begin{cases} z & \text{if } z > 0 \\ 0 & \text{if } z \leq 0 \end{cases}
$$

Here, $x_1, x_2, x_3$ are just some fixed data.

The weights $w_1, w_2, w_3$ should be ‘learned’.

Source: Marcus Lautier (2022).
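The two equations translate directly into code. In this sketch the input and weight values are made up, and the optional bias `b` (default 0, which gives exactly the equations on this slide) anticipates the next slide.

```python
import numpy as np


def relu(z):
    # a = z if z > 0, otherwise 0
    return np.maximum(z, 0.0)


def neuron(x, w, b=0.0):
    """Weighted sum of the inputs, plus an optional bias, then ReLU."""
    z = np.dot(x, w) + b
    return relu(z)


x = np.array([1.0, 2.0, 3.0])    # fixed data x_1, x_2, x_3 (made-up values)
w = np.array([0.5, -1.0, 0.25])  # weights w_1, w_2, w_3 (would be learned)
print(neuron(x, w))         # z = 0.5 - 2 + 0.75 = -0.75, so a = 0.0
print(neuron(x, w, b=1.0))  # z = 0.25, so a = 0.25
```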

One neuron with bias

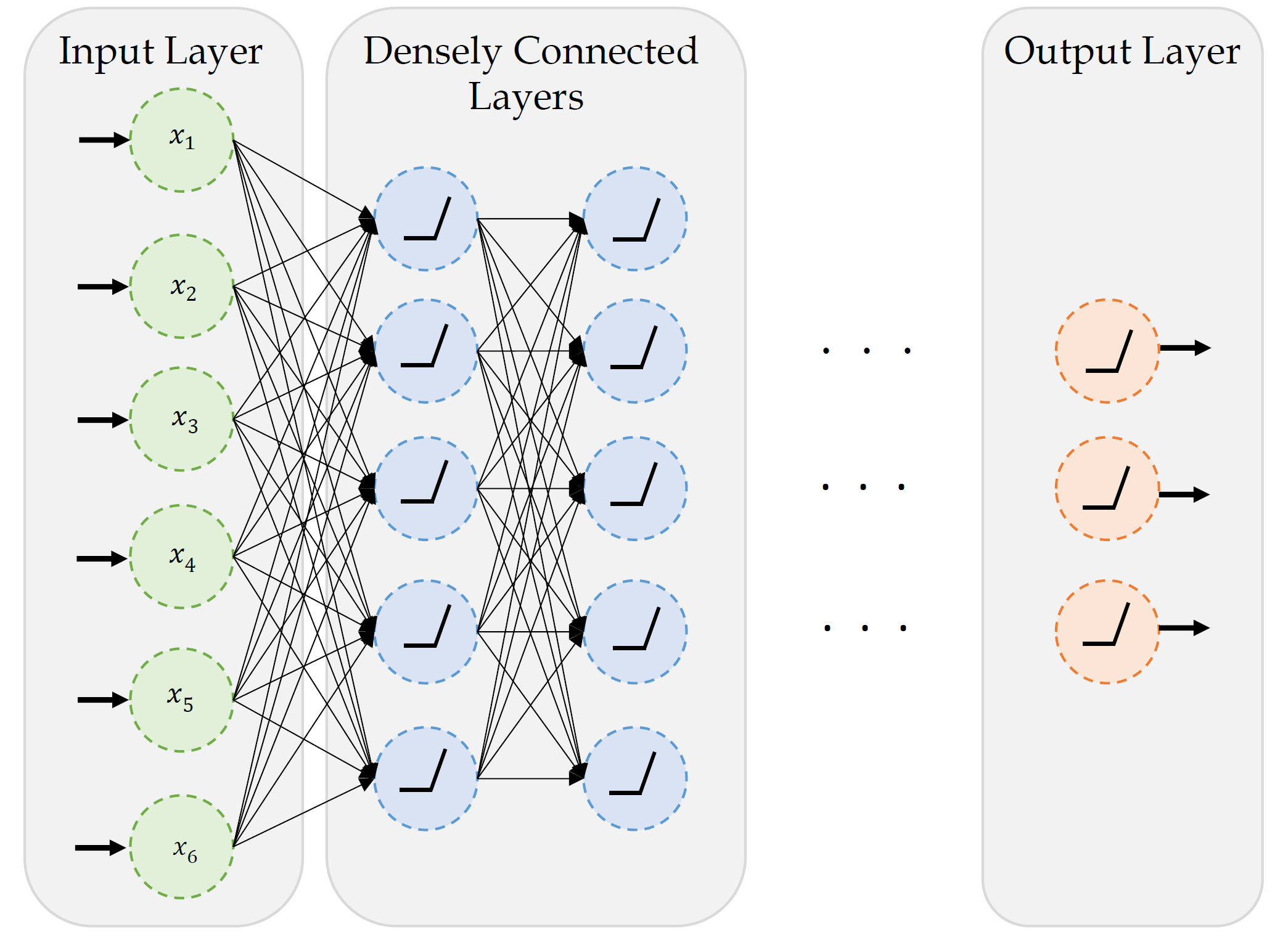

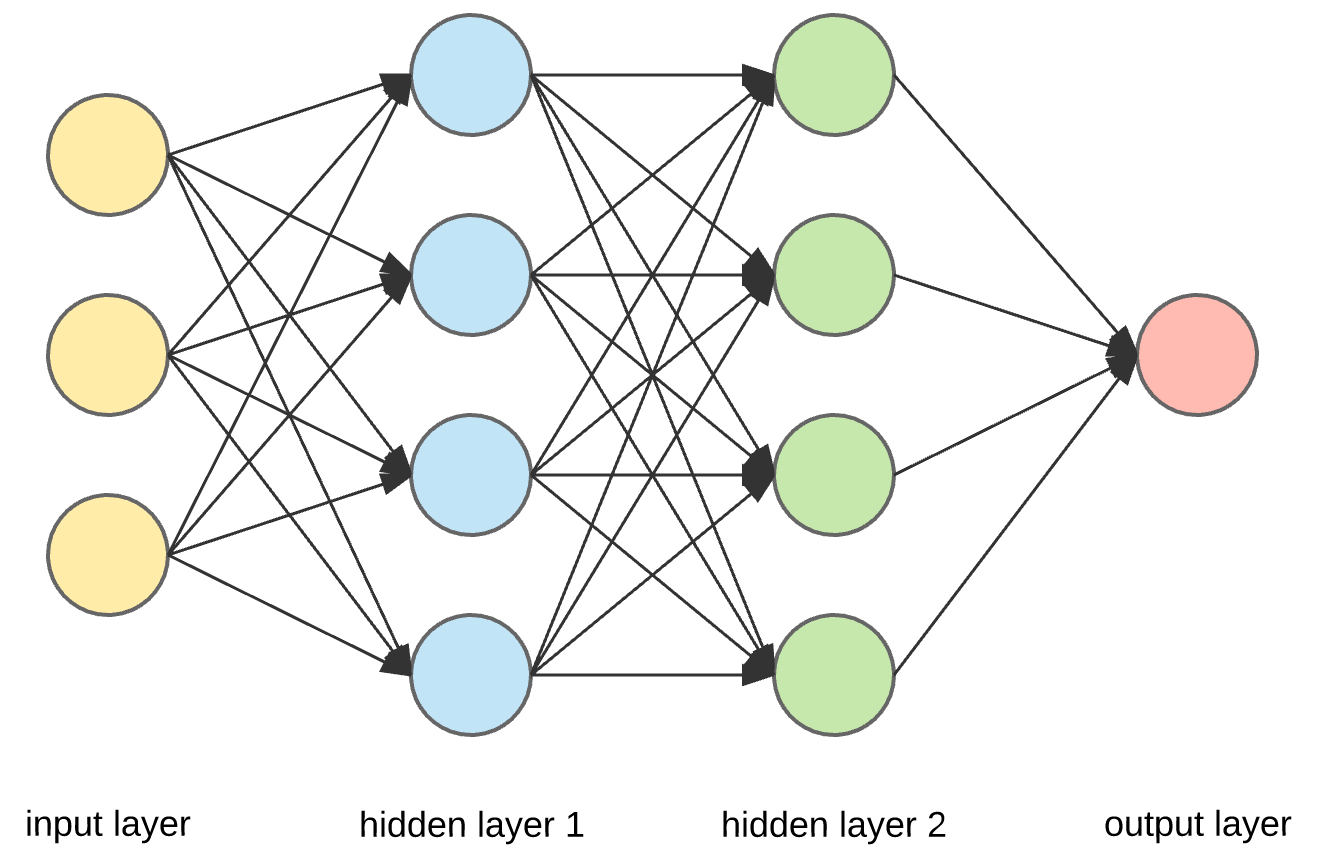

A basic neural network

A basic fully-connected/dense network.

Source: Marcus Lautier (2022).
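A dense layer applies many neurons at once, which is a matrix multiplication plus a bias vector. This sketch assumes ReLU on the hidden layer, a linear output layer and made-up layer sizes (3 inputs, 4 hidden neurons, 1 output); the figure's exact sizes are not reproduced here.

```python
import numpy as np


def relu(z):
    return np.maximum(z, 0.0)


def dense_forward(x, layers):
    """Forward pass through fully-connected layers.

    layers: list of (W, b) pairs, where W has shape (n_in, n_out).
    ReLU is applied on hidden layers; the last layer is left linear.
    """
    a = x
    for i, (W, b) in enumerate(layers):
        z = a @ W + b  # every input connects to every neuron in the layer
        a = z if i == len(layers) - 1 else relu(z)
    return a


rng = np.random.default_rng(0)
layers = [
    (rng.normal(size=(3, 4)), np.zeros(4)),  # 3 inputs -> 4 hidden neurons
    (rng.normal(size=(4, 1)), np.zeros(1)),  # 4 hidden -> 1 output
]
x = np.array([[1.0, 2.0, 3.0]])        # one example with 3 features
print(dense_forward(x, layers).shape)  # (1, 1)
```

Rows of `x` are examples, so the same function handles a whole batch at once.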

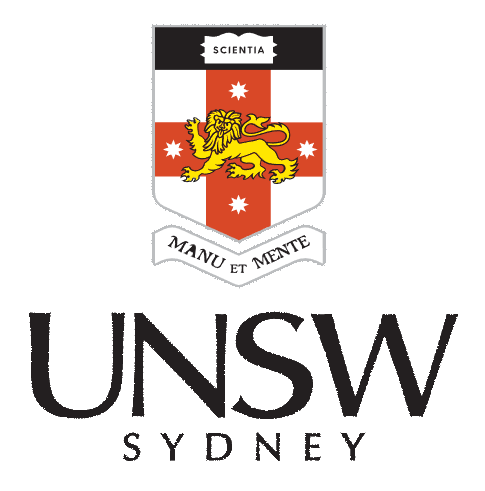

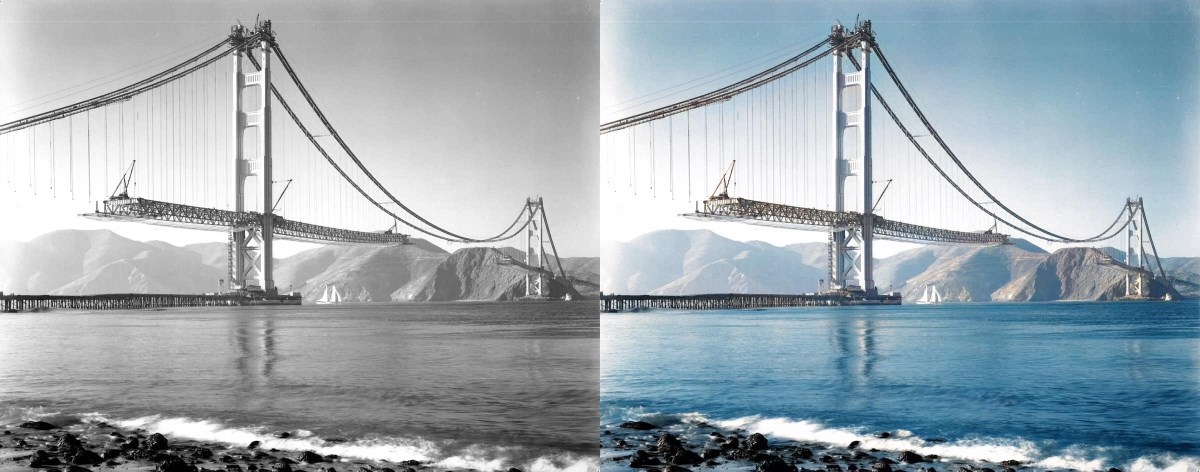

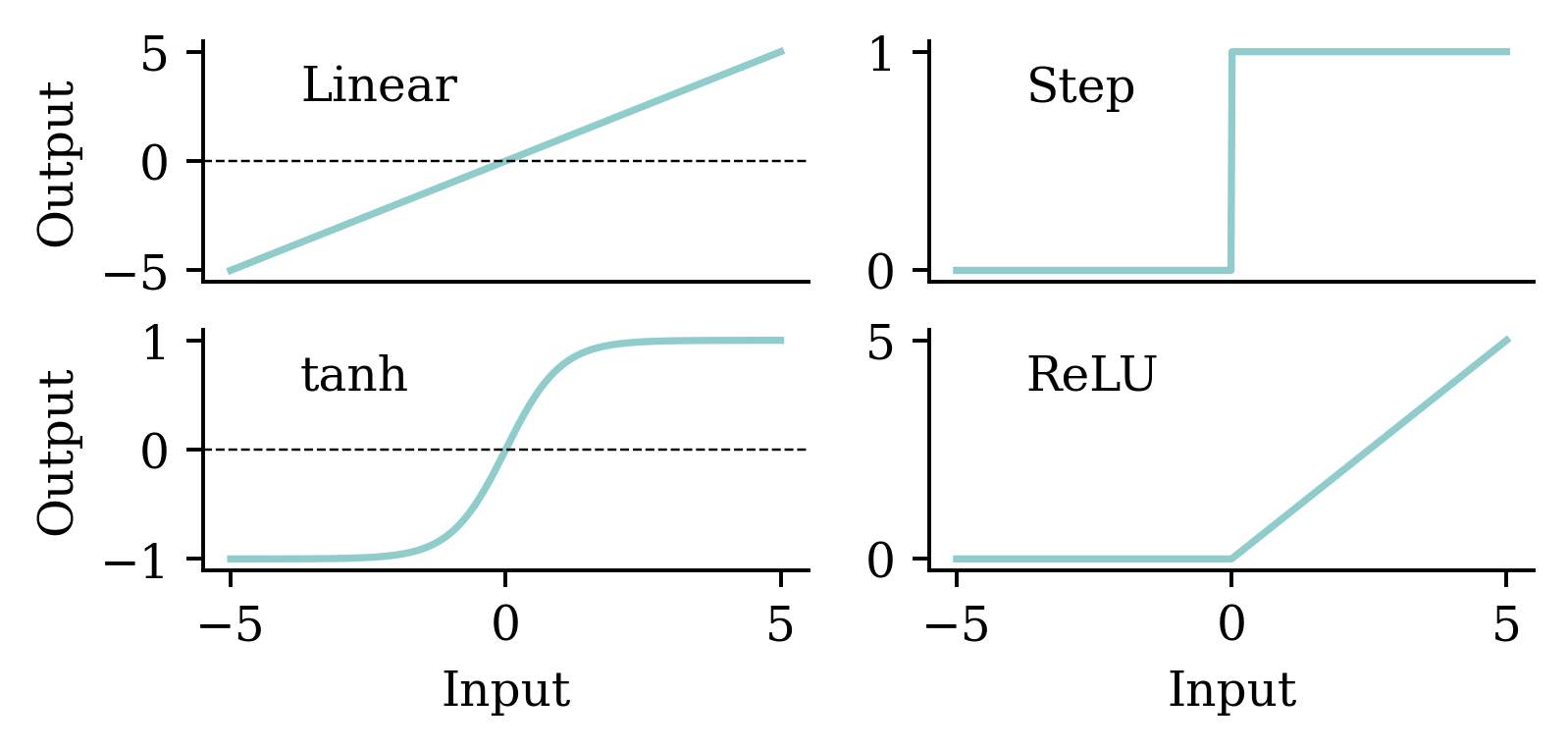

Step-function activation

Perceptrons

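Brains and computers are binary, so make a perceptron with binary data. Seemed reasonable, but networks of step-function units are impossible to train with gradient descent: the step function's gradient is zero almost everywhere.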

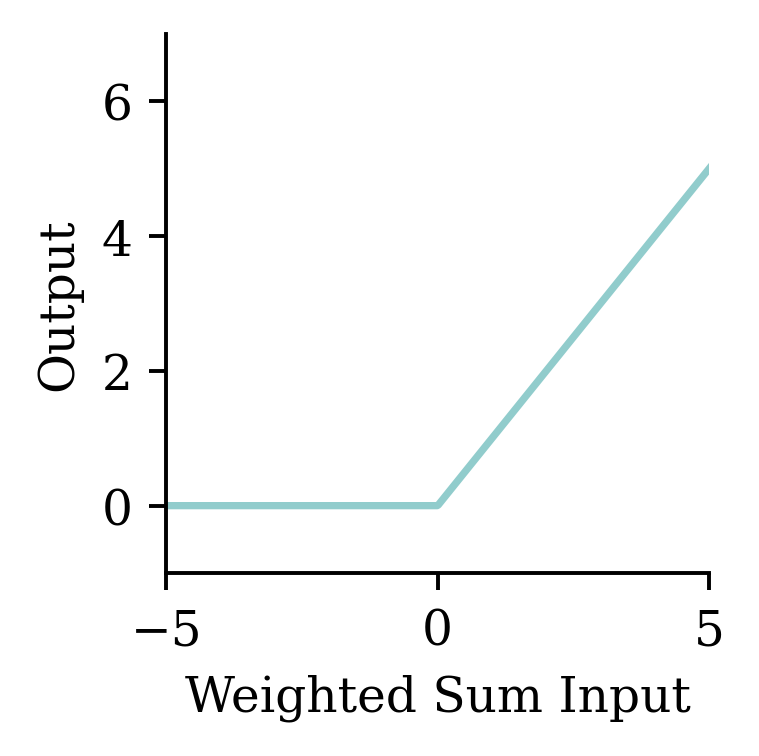

Modern neural network

Replace binary state with continuous state. Still rather slow to train.

Note

It’s a neural network made of neurons, not a “neuron network”.

Try different activation functions
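The activation functions from this lecture can be compared numerically. This sketch uses finite differences purely for illustration (Keras and PyTorch compute exact gradients by automatic differentiation) and avoids $z = 0$, where the step and ReLU functions have a kink.

```python
import numpy as np


def step(z):
    return (z > 0).astype(float)


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def relu(z):
    return np.maximum(z, 0.0)


def numerical_grad(f, z, eps=1e-4):
    # Central finite difference, good enough for a quick comparison
    return (f(z + eps) - f(z - eps)) / (2 * eps)


z = np.array([-2.0, -0.5, 0.5, 2.0])
for name, f in [("step", step), ("sigmoid", sigmoid), ("ReLU", relu)]:
    print(f"{name:>7}  a = {f(z).round(3)}  da/dz = {numerical_grad(f, z).round(3)}")
```

The step function's gradient is zero at every one of these points, so gradient descent gets no signal about which way to move the weights; sigmoid and ReLU do provide one.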

Flexible

One can show that an MLP is a universal approximator, meaning it can model any suitably smooth function, given enough hidden units, to any desired level of accuracy (Hornik 1991). One can either make the model be “wide” or “deep”; the latter has some advantages…

Source: Murphy (2012), Machine Learning: A Probabilistic Perspective, 1st Ed, p. 566.

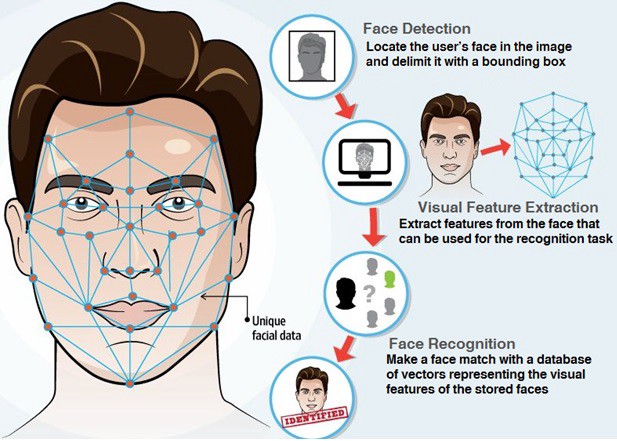

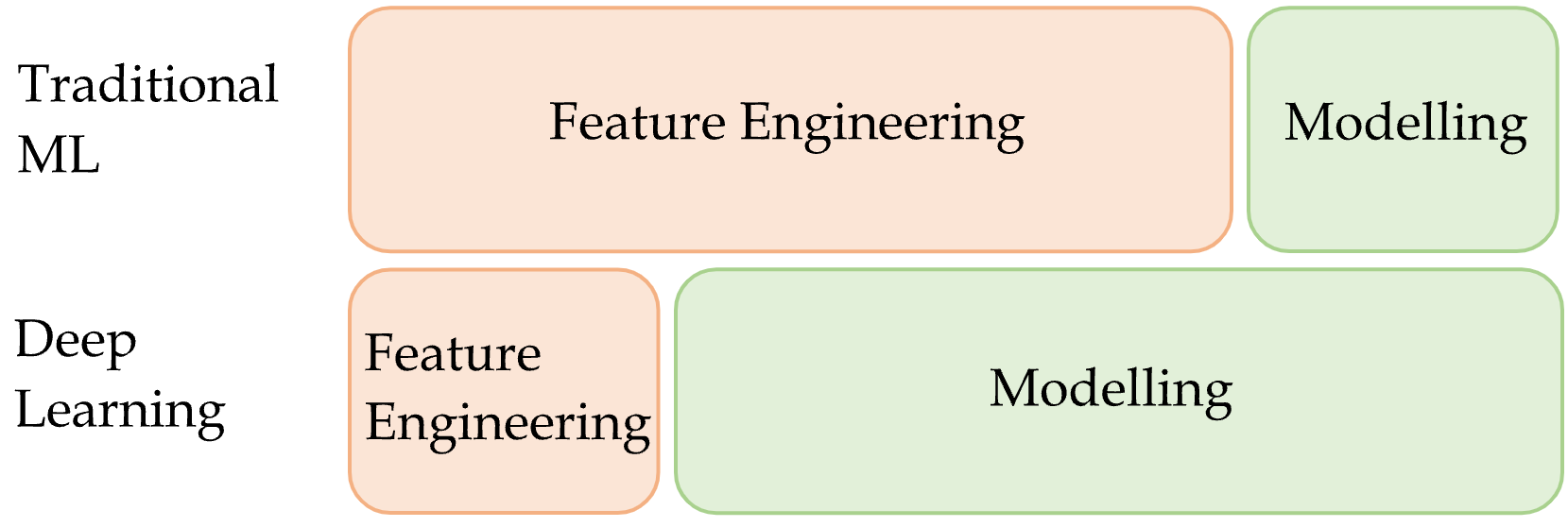

Feature engineering

Doesn’t mean deep learning is always the best option!

Sources: Marcus Lautier (2022) & Fenjiro (2019), Face Id: Deep Learning for Face Recognition, Medium.

Quiz

In this ANN, how many of the following are there:

- features,

- targets,

- weights,

- biases, and

- parameters?

What is the depth?

Source: Dertat (2017), Applied Deep Learning - Part 1: Artificial Neural Networks, Medium.
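To check quiz answers for any fully-connected network, each dense layer contributes $n_\text{in} \times n_\text{out}$ weights and $n_\text{out}$ biases. The architecture below is hypothetical and deliberately not the one in the quiz figure.

```python
def count_parameters(layer_sizes):
    """Count weights and biases in a fully-connected feed-forward network.

    layer_sizes lists the units per layer, inputs first:
    [n_features, hidden_1, ..., n_targets].
    """
    pairs = list(zip(layer_sizes[:-1], layer_sizes[1:]))
    weights = sum(n_in * n_out for n_in, n_out in pairs)
    biases = sum(n_out for _, n_out in pairs)
    return weights, biases, weights + biases


# Hypothetical architecture: 3 features -> 4 hidden -> 2 targets
print(count_parameters([3, 4, 2]))  # (20, 6, 26)
```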

Package Versions

Python implementation: CPython

Python version: 3.13.11

IPython version: 9.10.0

keras: 3.10.0

matplotlib: 3.10.0

numpy: 2.4.2

pandas: 3.0.0

seaborn: 0.13.2

scipy: 1.17.0

torch: 2.10.0

Glossary

- activations, activation function

- artificial neural network

- biases (in neurons)

- classification problem

- deep network, network depth

- dense or fully-connected layer

- feed-forward neural network

- labelled/unlabelled data

- machine learning

- minimax algorithm

- neural network architecture

- perceptron

- ReLU

- representation learning

- sigmoid activation function

- targets

- weights (in a neuron)